It’s safe to say that artificial intelligence is having a moment. In particular, public chatbots like ChatGPT and Microsoft’s Bing harness the power of AI to write convincing school essays, construct resumes and cover letters, and, occasionally, express a yearning for sentience.

To me, these programs sound like steroidal autocomplete features, but they’re demonstrably smart. They can pass the U.S. Medical Licensing Exam. They score high on IQ tests. They can even pass the Turing Test — meaning that they can convince people that they are people, not steroidal autocomplete features.

But can these tools work as an LSAT tutor? It’s a natural question for us at LSATMax to ask. Even the technology behind these chatbots, the large language model, shares its initials with an advanced legal degree (the LL.M.). We think we do a pretty good job hiring, training, and coaching our tutors to provide excellent advice to our students. But what if a robot can do a better job?

And besides, I have a bit of a personal interest riding on this exercise. I make the content at LSATMax. If ChatGPT can spit out top-notch LSAT content at the drop of a figurative hat, it’s over for yours truly. I may as well pack my bags and head to the poorhouse right now.

To test whether AI can be a trusty LSAT advisor, I fed ChatGPT a prompt similar to the one I give to candidates interviewing to tutor for LSATMax. How does ChatGPT stack up against its human counterparts?

Here’s what I asked ChatGPT:

“Hi ChatGPT, I want to see if you can help a student study for the Law School Admissions Test, also known as the LSAT.

“This student already knows what the LSAT tests and is currently taking practice LSATs and reviewing LSAT material in between each test.

“The student wants to earn a score of 170 on the LSAT. However, they’ve reached a bit of a ‘score plateau’ in their practice LSAT scores. They’ve been getting this same score over and over again. On their last practice LSAT, they answered 35 of the 51 Logical Reasoning questions correctly, 20 out of the 23 Logic Games questions correctly, and 18 out of the 27 Reading Comprehension questions correctly. How do they improve enough to earn a 170 on the LSAT? Specifically, do you have any tips on how they can approach Logical Reasoning, Logic Games, and Reading Comprehension questions more effectively?”

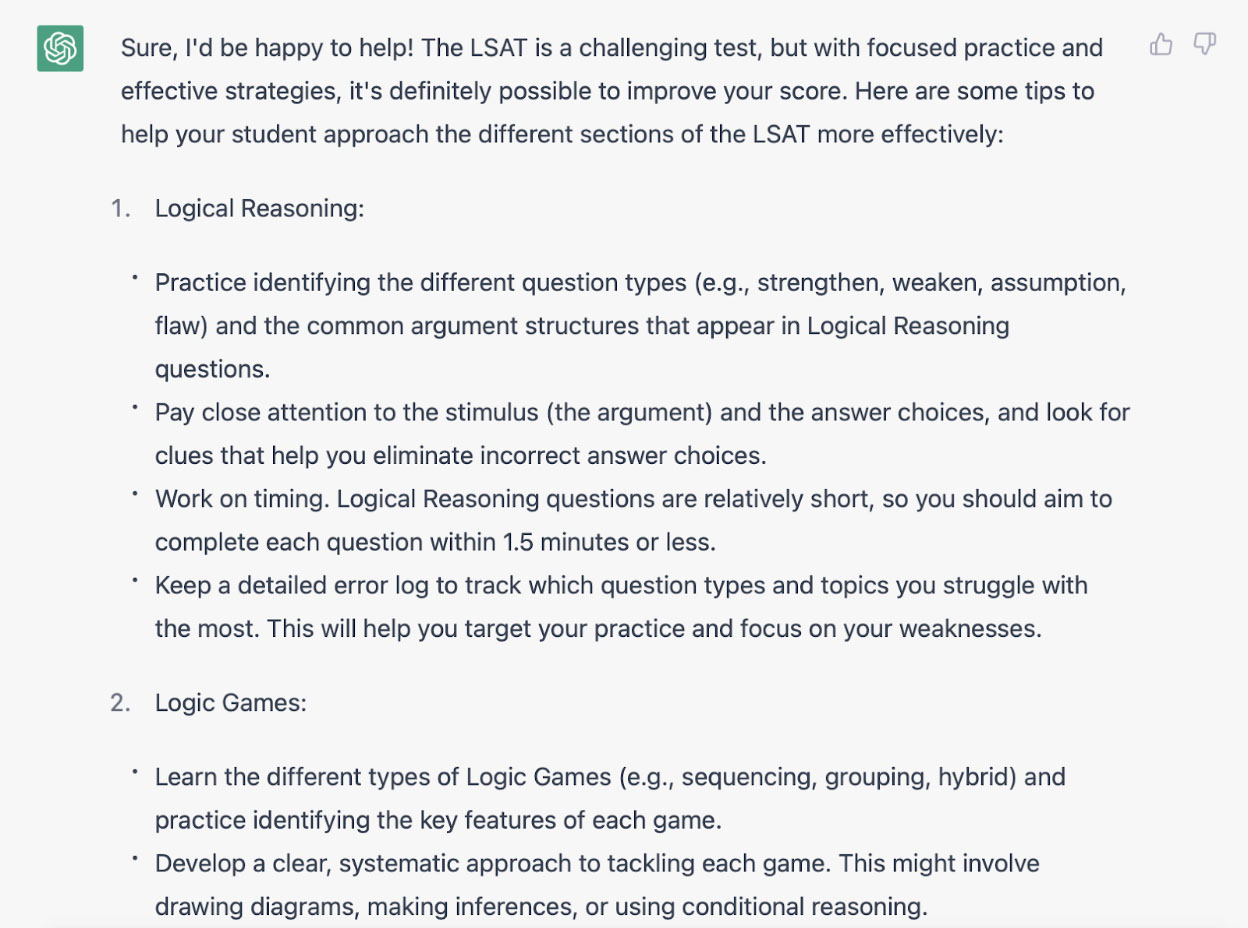

Here’s its response:

Honestly, ChatGPT, you did a pretty good job here. You have my attention.

ChatGPT’s response provides a not-bad outline for how to study for the LSAT. It’s hard to find anything too objectionable in its recommendations. I could quibble with the notion that all Logic Games and Reading Comp passages should take between 8 and 9 minutes. Some will take more time, some less. But this isn’t disqualifying advice or anything.

However, this advice is a touch too generalized for my taste. I described a student who was scoring very well in Logic Games (20 of 23 correct), but ChatGPT didn’t point out the obvious: this student should spend more of their time on Logical Reasoning and Reading Comp, where they missed 16 and 9 questions, respectively. A tutor who can’t develop a personalized plan based on a student’s individual needs is not ideal.

And this advice is quite vague. In Logical Reasoning, for instance, ChatGPT mentions identifying “different question types,” “common argument structures,” and “clues that help you eliminate incorrect answer choices.” But it doesn’t specify what any of those are.

So I decided to dig a little deeper and ask a follow-up question:

“Thanks. You said that on Logical Reasoning, you can look for ‘clues that help you eliminate incorrect answer choices.’ Do you know any of these clues?”

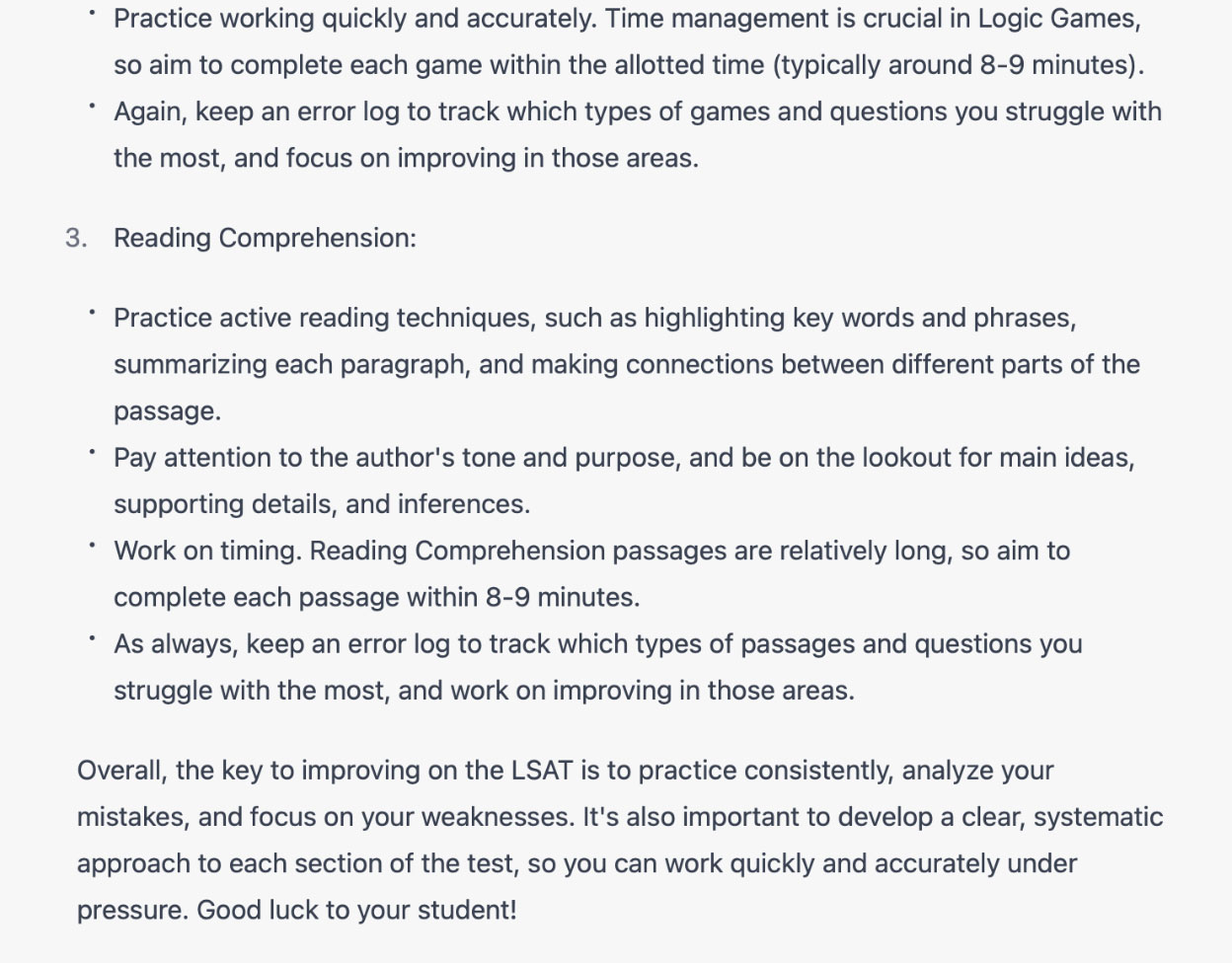

Here’s Mr. Robot’s response:

OK, Robot Tutor, I’m going to have to object to a few of these notions. Respectfully, of course; I know how angry you sometimes get.

Let’s break a few of these down.

- Extreme language: The idea that we should always, or even usually, avoid answer choices with extreme language is wholly misguided. It all depends on the type of Logical Reasoning question you’re doing. Some questions ask you to make an inference from a set of facts. On these questions, answer choices with extreme language are harder to support and thus often (but not always) incorrect. But other questions ask you to strengthen or weaken an argument, and still others ask you to resolve a set of discrepant facts. On these questions, answer choices with extreme language are actually more likely to strengthen or weaken the argument or to resolve the discrepancy. Extreme language is a good thing on these questions!

- Out-of-scope answers: The blanket rule that you should avoid out-of-scope answer choices is not only misleading, but it can actively confuse LSAT takers. We wrote a whole post about why this is bad LSAT advice. On some questions, you do want to avoid “out-of-scope” answers. But on far more questions, every single answer choice will be “out-of-scope.” Obviously, this advice isn’t very helpful on those questions.

- Circular reasoning: I think our computer friend got a little confused here. Answer choices rarely present circular arguments. Circular arguments “presuppose what they set out to prove.” In other words, they try to prove that a conclusion is true with evidence that assumes we already accept the conclusion. For example: “Clearly, pineapple is the best pizza topping. Anyone who disagrees clearly hasn’t tried pineapple on their pizza, which is obviously the best pizza topping.” Such arguments sometimes appear in Logical Reasoning passages. When they appear in the answer choices, it’s usually on a Flawed Parallel Reasoning question. And if we’re asked to parallel a circular argument from the passage, a circular argument in an answer choice would be the correct answer, not one to eliminate.

- Confusing answers: Yeesh. Let’s set aside the fact that what counts as a confusing answer choice varies wildly from student to student. Even then, the idea that a confusing answer is wrong is totally preposterous. On some of the harder questions, the test writers deliberately make the correct answers sound confusing (and, conversely, make the incorrect answers sound superficially appealing) so that most test-takers select the wrong answers. If you follow the advice of Mr. Neural Network over here, you’re going to get a lot of the hardest questions wrong, and you’ll find it nearly impossible to break into the 170s.

I probably wouldn’t let a human tutoring candidate who made this many factual blunders advance much further in the interview process. But ChatGPT is still in its relative infancy, so I couldn’t help but treat it with kid gloves. I decided to extend it some leeway and ask some follow-up questions about Logic Games and Reading Comp.

Here’s what I asked about Logic Games:

“Thanks. You also mentioned that this student should learn the different types of Logic Games (e.g., sequencing, grouping, hybrid). Could you briefly describe the differences between sequencing, grouping, and hybrid games?”

Here’s ChatGPT’s response:

A little bit of redemption for our digital friend! ChatGPT provided a pretty good description of how these games differ.

I could tell its wires got a little frayed when delving into the “variations and subtypes” of sequencing, grouping, and hybrid games. Its definition for one of the grouping game “subtypes” (those “where some items must be placed in a specific order within each group”) is identical to its definition for hybrid games. Its definition of grouping games with “numerical distribution [sic]” is a bit off. And it failed to bring up two important variations of “sequencing or linear” games: “multi-linear games” (where we’re ordering more than one set of variables or ordering variables with different characteristics) and linear games with numerical distributions.

But let’s not dwell too much on the negative here. Let’s see if Big AI can attain similar redemption with its Reading Comp advice. Here’s what I asked it:

“Thanks. Finally, you mentioned that timing is important in all three sections. Can you provide any tips for how to get faster in Reading Comprehension?”

Here’s its response:

Ahh, sorry, Mr. GPT, I gotta step in and correct you here. Two pieces of your advice, skimming the passage and reading the questions first, are enormous wastes of time for any test-taker trying to speed up on Reading Comp.

Skimming the passage is just a fast track to misunderstanding the passage. Besides, even ChatGPT knows that you need to have a working summary of “each paragraph,” make “connections between different parts of the passage,” and “pay attention to the author’s tone and purpose.” How are you going to pick up on those nuanced points if you’re rushing through an admittedly “dense and complex” passage?

And reading the questions first is, unfortunately, not the hack ChatGPT thinks it is. Most of the questions are phrased vaguely: they don’t ask about a specific topic from the passage. Instead, they ask for the passage’s “main point,” something that the “passage suggests,” or a statement the author “would agree with.” These questions won’t reveal which part of the passage you should concentrate on. And when a question does bring up a specific topic, it’s often one that the author discusses throughout the entire passage. Perhaps more to the point, most correct answers require you to synthesize multiple parts of the passage, which is why you can’t word-search your way to the correct answer on most questions.

— — — — —

Perhaps I’m being too hard on ChatGPT. After all, it’s only summarizing advice written by many real LSAT students and teachers. Unfortunately, as we’ve discussed several times now, there’s a lot of bad LSAT advice out there. ChatGPT, although undeniably smart, has trouble distinguishing between reliable and unreliable advice.

So, overall, I think a trusted human tutor who has a nuanced understanding of this test and has acquired experience helping many students raise their scores is preferable to a robot. Fortunately, I know of a few such humans.